Featured Past Articles

TETE-A-TETE

WITH THE TECHNICAL SALES MANAGER DOW AGROSCIENCES

Looking back over the past twenty-four months, Oscar Shilliebo, Technical Sales Manager at Dow Agrosciences, is proud of the company’s performance even though it has been an exceptionally difficult year all round in the industry. With the next year underway and plenty of strategic plans in place, Shilliebo looks ahead with anticipation to the next twelve months.

Some years back, Integrated Pest Management (IPM) became a buzzword in the floriculture industry. It was the ideal concept to subscribe to, and everyone was eager to be seen as either an adopter or an advocate of IPM. Beyond the hype, we have witnessed positive progress in the manner in which Kenyan flower growers are implementing IPM measures in their pest control programs. However, it is still not exactly clear to some people what really constitutes IPM. The UN’s Food and Agriculture Organisation (FAO) defines IPM as “the careful consideration of all available pest control techniques and subsequent integration of appropriate measures that discourage the development of pest populations and keep pesticides and other interventions to levels that are economically justified and reduce or minimize risks to human health and the environment. IPM emphasizes the growth of a healthy crop with the least possible disruption to agro-ecosystems and encourages natural pest control mechanisms.”

Read more: How to Reduce Pesticide Use in Floriculture through IPM

The 6th edition of HortiFlora Ethiopia, an international horticultural show, successfully took place from March 25 to 27, 2015 at Millennium Hall, Bole, Addis Ababa, Ethiopia. The event was colourfully opened by the Ethiopian Prime Minister, Hailemariam Desalegn. Also present were senior government officials, civil society organizations and other stakeholders.

The Prime Minister said that Ethiopia’s long-term development strategy envisages agriculture-centred development that brings fast economic progress, benefits the people and lays the foundation for industrial development.

Revolutionize Packaging For The Flower Industry

There comes a time in the business world when integrity, honesty, openness, personal excellence, continual self-improvement and mutual respect become valued constituents for things to move in the right direction. With these core beliefs, Silpack readily takes on all challenges and prides itself on seeing them through, holding itself accountable to its customers, honoring its commitments and striving for the highest quality in new and current developments.

Undervalued, neglected resource

Undervalued, the soil has become politically and physically neglected, triggering its degradation due to erosion, compaction, salinization, soil organic matter and nutrient depletion, acidification, pollution and other processes caused by unsustainable land management practices. The irony is that the main culprit of soil degradation is the very thing that most relies on healthy soils: agriculture. Industrial agriculture’s intensive production systems, which rely on the heavy application of synthetic fertilizers and pesticides, have depleted soil to the point that we are in danger of losing significant portions of arable land.

It is estimated that on nearly one-third of the earth’s land area, land degradation reduces the productive capacity of agricultural land by eroding topsoil and depleting nutrients, resulting in enormous environmental, social and economic costs. Most critically, land degradation reduces soil fertility, leading to lower yields.

In Africa, the United Nations paints a graver picture: 65% of arable land, 30% of grazing land and 20% of forests are already degraded. Locally, there has been concern in recent years about the state of Kenyan soils and the decline in yields in some parts of the country attributed to soil health. The first national soil test was carried out across the country, and the results, released in February last year, revealed a range of issues, from soil pH to limited nutrients and organic matter content in the soil.

2015 – The International Year of Soils

This worrying state of soil affairs, against the backdrop of unprecedented population growth, which will require an increase of approximately 60 percent in food production by 2050, means that business-as-usual cannot be an option going forward. Driven by an increasing awareness that soil health is at the root of planetary, agricultural and, of course, human health, the Food and Agriculture Organization of the United Nations (FAO) has declared 2015 the International Year of Soils in an effort to raise awareness and promote more sustainable use of this critical resource. It notes that unless new approaches are adopted, globally, arable and productive land per person in 2050 will be one-fourth of the level in 1960. Healthy soils are not only the foundation for food, fuel, fibre and medical products, but are also essential to our ecosystems, playing a key role in the carbon cycle, storing and filtering water, and improving resilience to floods and droughts. This, of course, is an incredibly timely initiative in light of a series of serious challenges impacting our future and perhaps our very existence, and one that we should all surely embrace with open hearts and willing hands!

What is a healthy soil?

But what constitutes a healthy soil? FAO defines soil health as the capacity of soil to function as a living system, within ecosystem and land use boundaries, to sustain plant and animal productivity, maintain or enhance water and air quality, and promote plant and animal health. Healthy soils maintain a diverse community of soil organisms that help to control plant disease, insect and weed pests; form beneficial symbiotic associations with plant roots; recycle essential plant nutrients; improve soil structure, with positive repercussions for soil water and nutrient holding capacity; and ultimately improve crop production.

The concept of soil health captures the ecological attributes that are chiefly associated with the soil biota: its biodiversity, its food web structure, its activity and the range of functions it performs. At least a quarter of the world’s biodiversity lives underground. Such organisms, including plant roots, act as the primary agents driving nutrient cycling and help plants by improving nutrient intake, in turn supporting above-ground biodiversity as well. This biological component of the soil system depends heavily on the chemical and physical soil components.

There is a price to pay

The green revolution of the past century has seen the constant removal of soil minerals and a loss of two-thirds of the humus that helps to store and deliver those minerals and on which the organisms depend. It is a no-brainer to recognise that every time we harvest a crop from a field, we are removing a little of the minerals that were originally present in those soils. We replace a handful of them, often in an unbalanced fashion, and we decimate our soil life with farm chemicals, many of which are proven biocides. And when we decimate this ‘microbial bridge’ between soil and plant there is a price to pay. The plant suffers, in that it has less access to the trace minerals that fuel immunity, and the animals and humans eating those plants are also compromised. Restoration of this microbe bridge between soil and plant through sustainable soil management is key to the achievement of food security and nutrition, climate change adaptation and mitigation and overall sustainable development. How do we do this?

Composting

Composting, the accelerated conversion of organic matter into stable humus, is much more than just that. When compost is added to the soil it stimulates and regenerates the soil life responsible for building humus. Compost serves as a microbial inoculum to restore your workforce. A teaspoon of good compost can contain as many as 5 billion organisms and thousands of different species. These beneficial microbes increase biodiversity and the balance of nature that comes with it. This balance can create a disease-suppressive soil where beneficial organisms neutralise pathogens through competition for nutrients and space, the consumption of plant pathogens, the production of inhibitory compounds and induced disease resistance through a plant immune-boosting phenomenon called systemic acquired resistance. Vermicompost (compost produced by worms) such as VERMITECH® is a superior type of compost containing worm castings (worm poop). Castings are loaded with beneficial microorganisms which continuously build fertility in the soil. They are very high in organic matter and humates, both of which are extremely important to plant and soil health.

Mycorrhiza

Mycorrhiza is a general term describing a symbiotic relationship between a soil fungus and a plant root. Mycorrhizal fungi have been lauded as the most important creatures on the planet at this point in time. Apart from enhancing plant growth and vigour by increasing the effective surface area for efficient absorption of essential plant nutrients, these organisms produce a carbon-based substance (called glomalin) that, in turn, triggers the formation of 30% of the stable carbon in our soils. These fungi are endangered organisms, as we have lost 90% of them in farmed soils. Dudutech, for example, has developed a mycorrhizal inoculum called RHIZATECH®, allowing farmers to effectively reintroduce these important creatures into farmlands. Compost also has a remarkable capacity to stimulate mycorrhizal fungi.

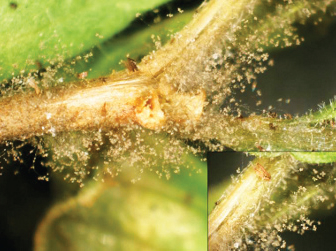

Pest antagonists

The soil degradation described above disturbs the balance of nature that keeps pest organisms in check, leading to an upsurge of pests (including diseases). Another sustainable way of restoring this balance is the re-introduction of antagonistic fungi. These include Trichoderma (TRICHOTECH®), which attacks the fungi that cause root rots, such as Fusarium, Rhizoctonia and Pythium, and nematode-attacking fungi such as Purpureocillium (formerly Paecilomyces) (MYTECH®), which attack plant parasitic nematodes such as root knot nematodes.

Protect soil life

Strategies that promote the survival of soil life and its humus home base must be promoted. Moreover, there is no point in reintroducing beneficial microbes with one hand and then promptly destroying the new population with the other. The use of unbuffered salt fertilisers kills many beneficials, and overtillage destroys mycorrhiza. However, the single most destructive component of modern agriculture, in terms of soil life, has been pesticides. Even some ‘safe’ herbicides are more destructive than fungicides in destroying beneficial fungi.

Manage nitrogen

Mismanagement of nitrogen is a major player in the loss of humus. Excess nitrogen stimulates bacteria and, in the absence of applied carbon, they have no choice but to feed on humus. We therefore need to regulate N applications (e.g. by adopting foliar application of N) and to include a carbon source such as molasses, manure or compost with every nitrogen application. The carbon source offers the bacteria an alternative to eating humus.

Turning point

The UN declaration of 2015 as the International Year of Soils is a timely wake-up call encouraging a focus upon the importance of the thin veil of topsoil that sustains us all in so many ways. It is not too late to recognise past mistakes and move forward to make this critically important year the turning point. The good news is that the Kenyan agricultural sector is well endowed with a broad range of expertise that is well positioned and ready to assist commercial growers and rural communities develop production systems that are economically viable and environmentally intelligent.

The author is the Training Manager at Dudutech

Listening to the continuity announcer, I heard her give the weather forecast for the next five days: cloudy, cool and rainy. These low-light, humid conditions, combined with a near-full greenhouse of floral crops, meant Botrytis blight outbreaks. My crop was especially vulnerable now since it had a full flower canopy filled to the maximum allowable space.

Immediately, my Production Manager called me. “We are in danger of contracting Botrytis,” he started. “Botrytis is a fungal disease that can cause leaf spots, petiole blighting and stem cankers on

As the curtain rises on the 4th edition of the International Floriculture Trade Expo (IFTEX) 2015, which runs from 3rd to 5th June, there will be no room for any shortcoming that may hamper its success. All minds and hands that know what it entails have been hard at work putting the different pieces together to create what can be seen as the true spirit of the regional horticultural industry.

Since its inception in 2012, the event has continued to aggressively spread its tentacles the world over, drawing in some of the most reputable and unrivalled companies from a wide range of businesses.